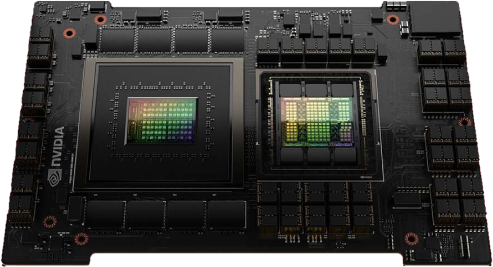

NVIDIA GH200 ARM64 Platform

Purpose-built for air-gapped AI cybersecurity operations


The GH200 platform's 144GB of GPU memory lets Eymbr deliver enterprise-grade AI capabilities from a single node while remaining fully air-gapped.

Use 144GB of VRAM within 432GB of total unified memory to retain long context during complex investigations, with large GPU memory in a compact, single-node form factor.
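How much context fits is governed mostly by the key/value (KV) cache the language model keeps per token. The sketch below estimates it. The model shape (64 layers, 8 KV heads, head dimension 128) is an assumption meant to resemble Qwen3-32B and should be checked against the `config.json` of the checkpoint actually deployed; the 70 GiB free-VRAM figure is hypothetical.

```python
# Sketch: estimate how many tokens of KV cache fit in leftover VRAM.
# ASSUMED model shape (Qwen3-32B-like): 64 layers, 8 KV heads, head_dim 128.
# Verify these against the config.json of the checkpoint you actually deploy.
from dataclasses import dataclass

GIB = 1024 ** 3


@dataclass(frozen=True)
class ModelShape:
    num_layers: int
    num_kv_heads: int
    head_dim: int


def kv_bytes_per_token(shape: ModelShape, bytes_per_elem: int = 2) -> int:
    """Bytes of K and V cache stored per token across all layers."""
    # Factor 2: one tensor for keys, one for values.
    return 2 * shape.num_layers * shape.num_kv_heads * shape.head_dim * bytes_per_elem


def max_cached_tokens(free_vram_gib: float, shape: ModelShape, bytes_per_elem: int = 2) -> int:
    """Tokens that fit in free_vram_gib, summed over all concurrent sequences."""
    return int(free_vram_gib * GIB) // kv_bytes_per_token(shape, bytes_per_elem)


if __name__ == "__main__":
    qwen3_32b_assumed = ModelShape(num_layers=64, num_kv_heads=8, head_dim=128)
    per_token = kv_bytes_per_token(qwen3_32b_assumed)  # BF16 cache (2 bytes/element)
    print(f"KV cache per token: {per_token // 1024} KiB")
    # Hypothetical budget: 70 GiB of VRAM left after weights and other allocations.
    print(f"Tokens that fit in 70 GiB: {max_cached_tokens(70, qwen3_32b_assumed):,}")
```

Under these assumed values each token costs 256 KiB of BF16 cache, so every GiB of free VRAM holds 4,096 tokens; an FP8 cache would double that.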

Instant semantic search across conversations, documents, and training materials.
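Semantic search of this kind typically ranks stored items by the cosine similarity between their embedding vectors and the query's embedding. The sketch below shows that ranking step in plain Python; the document ids and three-dimensional vectors are hypothetical toy values, whereas a real deployment would use embedding-model output held as GPU tensors.

```python
# Sketch of semantic search: rank stored items by cosine similarity to a query embedding.
# Vectors are hypothetical toy values; real ones would come from the embedding model.
import math
from typing import Sequence


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # a zero vector has no direction; treat as unrelated
    return dot / (norm_a * norm_b)


def top_k(query: Sequence[float], index: dict[str, Sequence[float]], k: int = 3) -> list[tuple[str, float]]:
    """Return the k document ids most similar to query, best first."""
    scored = [(doc_id, cosine(query, vec)) for doc_id, vec in index.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


if __name__ == "__main__":
    index = {
        "phishing-playbook": [0.9, 0.1, 0.0],
        "ransomware-training": [0.1, 0.9, 0.2],
        "incident-chat-log": [0.8, 0.2, 0.1],
    }
    for doc_id, score in top_k([1.0, 0.0, 0.0], index, k=2):
        print(f"{doc_id}: {score:.3f}")
```

A linear scan like this is fine for small collections; larger corpora usually move to batched matrix products on the GPU or an approximate nearest-neighbor index.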

Keep your team's knowledge and training materials in GPU memory for instant access.

Intelligent allocation of GPU resources between AI models and data storage.
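One simple way to reason about sharing VRAM between models and resident knowledge is a static budget: reserve headroom, pin model weights, then split the remainder between the language model's KV cache and a GPU-resident knowledge index. This is a deliberately simple illustration, not the adaptive allocation Eymbr describes; `plan_vram`, the 10% headroom, the 25% index share, and the weight sizes are all hypothetical.

```python
# Sketch of a static VRAM budget: reserve headroom, pin model weights, then split
# what remains between the LLM's KV cache and a GPU-resident knowledge index.
# All sizes and fractions are illustrative assumptions, not measured Eymbr values.
from dataclasses import dataclass


@dataclass(frozen=True)
class Budget:
    weights_gb: float
    kv_cache_gb: float
    index_gb: float
    headroom_gb: float


def plan_vram(total_gb: float, llm_weights_gb: float, embed_weights_gb: float,
              headroom_frac: float = 0.10, index_share: float = 0.25) -> Budget:
    """Split total_gb; index_share is the fraction of the remainder given to the index."""
    if not (0.0 <= headroom_frac < 1.0 and 0.0 <= index_share <= 1.0):
        raise ValueError("headroom_frac must be in [0, 1) and index_share in [0, 1]")
    headroom = total_gb * headroom_frac          # CUDA context, activations, fragmentation
    weights = llm_weights_gb + embed_weights_gb  # loaded once, stays resident
    remainder = total_gb - headroom - weights
    if remainder < 0:
        raise ValueError("models do not fit in the available VRAM")
    index = remainder * index_share
    return Budget(weights_gb=weights, kv_cache_gb=remainder - index,
                  index_gb=index, headroom_gb=headroom)


if __name__ == "__main__":
    # Hypothetical inputs: ~65.6 GB BF16 LLM weights, ~1.2 GB embedding model.
    print(plan_vram(total_gb=144, llm_weights_gb=65.6, embed_weights_gb=1.2))
```

With these inputs the plan reserves 14.4 GB of headroom and 66.8 GB for weights, leaving 62.8 GB split into 47.1 GB of KV cache and 15.7 GB for the index.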

Run the Qwen3-32B language model entirely on-device, so no data leaves the system.
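Whether a model fits on-device starts with its weight footprint: parameter count times bytes per parameter. The sketch below assumes roughly 32.8 billion parameters for Qwen3-32B (check the model card) and ignores KV cache, activations, and runtime overhead.

```python
# Back-of-the-envelope weight memory for a ~32B-parameter model at several precisions.
# ASSUMPTION: ~32.8e9 parameters; excludes KV cache, activations, and runtime overhead.
BYTES_PER_PARAM = {"bf16": 2.0, "fp8": 1.0, "int4": 0.5}


def weight_gb(num_params: float, dtype: str) -> float:
    """Weight footprint in decimal gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


if __name__ == "__main__":
    params = 32.8e9
    for dtype in BYTES_PER_PARAM:
        gb = weight_gb(params, dtype)
        print(f"{dtype:>5}: {gb:6.1f} GB of weights, {144 - gb:6.1f} GB of 144 GB VRAM left")
```

At BF16 the weights take about 65.6 GB, leaving most of the 144GB for KV cache and other models; FP8 halves that to about 32.8 GB.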

Concurrent operation of language and embedding models for comprehensive analysis.
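At the application level, running both models concurrently can be as simple as issuing the embedding request and the generation request together and awaiting both. In the sketch below, `embed` and `generate` are hypothetical stand-ins for calls to locally served models, and the sleeps only simulate latency; how the two actually share the GPU depends on the serving stack.

```python
# Sketch: issue an embedding request and an LLM request concurrently with asyncio.
# embed() and generate() are hypothetical stubs for locally served models.
import asyncio


async def embed(text: str) -> list[float]:
    await asyncio.sleep(0.05)            # placeholder for embedding-model latency
    return [float(len(text)), 0.0, 1.0]  # toy vector, not a real embedding


async def generate(prompt: str) -> str:
    await asyncio.sleep(0.10)            # placeholder for LLM latency
    return f"summary of: {prompt}"


async def analyze(note: str) -> tuple[list[float], str]:
    # Both coroutines run concurrently; total wait is roughly the slower of the two.
    vector, summary = await asyncio.gather(embed(note), generate(note))
    return vector, summary


if __name__ == "__main__":
    vec, text = asyncio.run(analyze("suspicious PowerShell in host logs"))
    print(vec, text)
```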

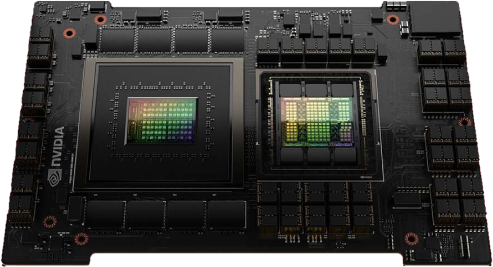

| Processing Architecture | |
|---|---|
| CPU | NVIDIA Grace CPU, 72 Arm Neoverse V2 cores |
| GPU | NVIDIA H100 Tensor Core GPU |
| Architecture | ARM64 (aarch64) |
| Interconnect | NVLink-C2C, 900 GB/s bidirectional |

| Memory Configuration | |
|---|---|
| Total Memory | 432GB unified memory |
| GPU Memory (VRAM) | 144GB HBM3e |
| Memory Bandwidth | Up to 4.9 TB/s GPU memory bandwidth |
| Cache Coherent | Yes, CPU and GPU share one memory address space |
| ECC Support | Full ECC protection |

| AI Performance | |
|---|---|
| FP8 Performance | 3,958 TFLOPS (with sparsity) |
| FP16 Performance | 1,979 TFLOPS (with sparsity) |
| Tensor Cores | 4th generation, with Transformer Engine |
| MIG Support | Multi-Instance GPU (MIG) capable |

| Software Requirements | |
|---|---|
| Operating System | Ubuntu 22.04 or later (ARM64) |
| CUDA Version | 12.0 or later |
| Python | 3.10 or later |
| Storage | 100GB+ SSD recommended |

| Security Features | |
|---|---|
| Confidential Computing | Hardware-based confidential computing (CC) mode |
| Secure Boot | UEFI Secure Boot support |
| Memory Encryption | Available in CC mode |
| Air-Gap Ready | Runs with no external network dependencies |
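A host can be checked against the software requirements in the table with a short preflight script. The sketch below mirrors those thresholds; treating free disk space as the storage check and the function name `check_host` are assumptions, and the CUDA version check is left out because it depends on the installed tooling (for example `nvidia-smi` or a framework such as PyTorch).

```python
# Preflight sketch: check a host against the software requirements table.
# Free disk space is used as a proxy for the storage requirement (an assumption).
import platform
import shutil
import sys

MIN_PYTHON = (3, 10)
MIN_FREE_DISK_GB = 100


def ubuntu_ok() -> bool:
    """True if /etc/os-release reports Ubuntu 22.04 or later."""
    try:
        info = platform.freedesktop_os_release()  # available in Python 3.10+
    except (OSError, AttributeError):
        return False
    if info.get("ID") != "ubuntu":
        return False
    major, _, minor = info.get("VERSION_ID", "0.0").partition(".")
    return (int(major), int(minor or 0)) >= (22, 4)


def check_host(path: str = "/") -> dict[str, bool]:
    free_gb = shutil.disk_usage(path).free / 1e9
    return {
        "arm64": platform.machine() in ("aarch64", "arm64"),
        "ubuntu": ubuntu_ok(),
        "python": sys.version_info[:2] >= MIN_PYTHON,
        "disk": free_gb >= MIN_FREE_DISK_GB,
    }


if __name__ == "__main__":
    for name, ok in check_host().items():
        print(f"{name:<7} {'OK' if ok else 'FAIL'}")
```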
